OpenAI Announces o3: The Path to AGI Now Stands Unobstructed

After twelve days of livestreams, OpenAI's grand finale has arrived. Sam Altman, back on stream amid the Christmas festivities, unveiled the company's latest masterpiece: OpenAI o3.

This release once again pushes AI capabilities to new heights, reinforcing OpenAI's seemingly unshakeable grip on the iron throne of AI.

It brings to mind what an OpenAI researcher said before the release of o1: "On our path to AGI, there are no more obstacles in our way."

Why did OpenAI skip o2 and go straight to o3? The answer is simple: to avoid a potential trademark conflict with O2, the British telecommunications provider.

As soon as OpenAI's stream concluded, X erupted with excitement.

o3's capabilities represent a leap beyond every existing model. Let's look at its benchmark results:

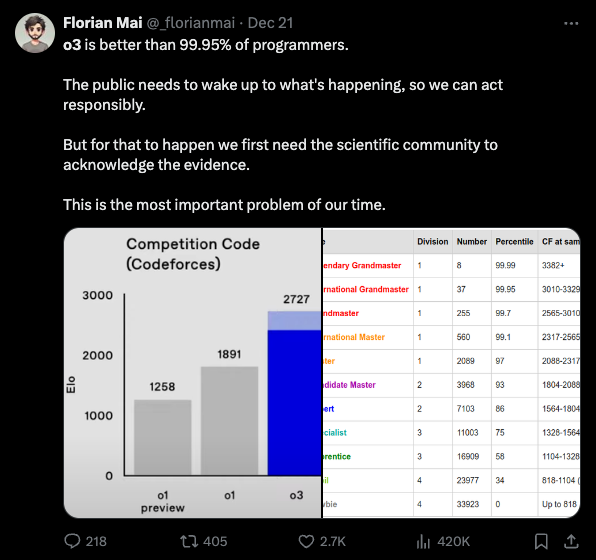

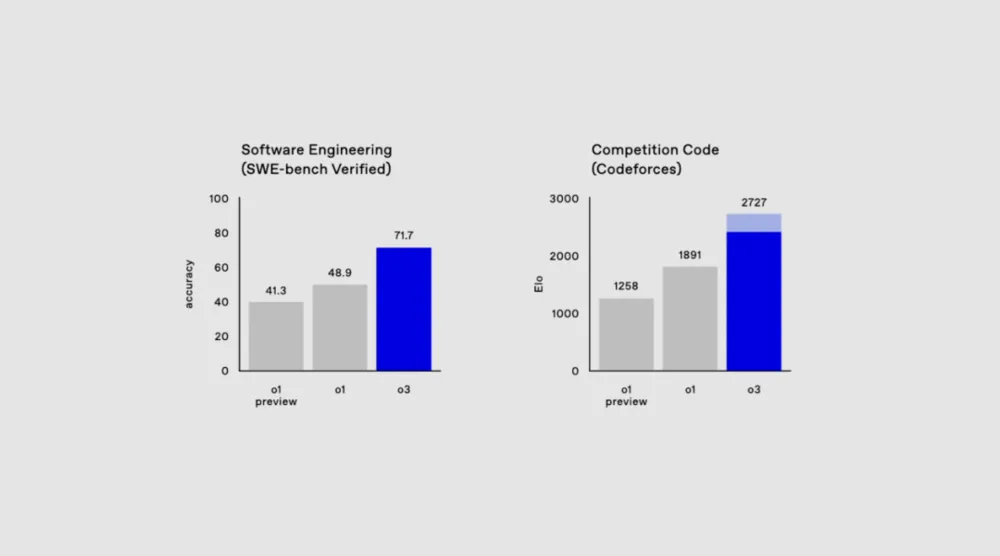

Software Engineering and Coding Excellence

The model was evaluated on two major coding benchmarks:

- SWE-bench Verified (real-world software engineering tasks drawn from GitHub issues): o3 scored 71.7%, well ahead of o1.

- Codeforces (competitive programming): o3 reached a rating of 2727, ranking 175th globally and surpassing 99.99% of human participants.

o1's coding capabilities were already extraordinary, and o3 has taken another giant leap toward the summit of AGI.

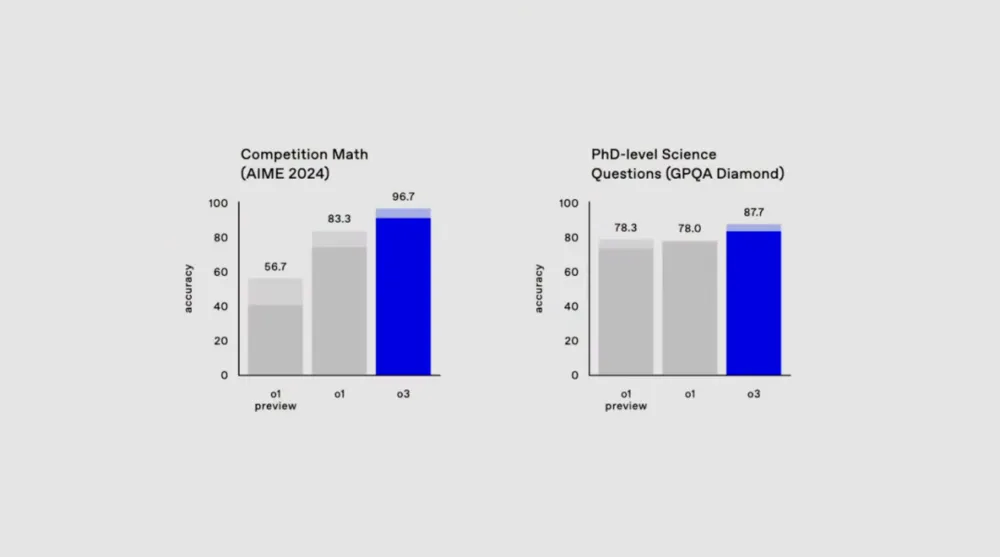

Mathematical and Scientific Prowess

In mathematical competitions and scientific examinations, o3 demonstrated exceptional capabilities:

- AIME 2024: A near-perfect score of 96.7%, the first time an AI has achieved such a feat

- GPQA Diamond (PhD-level science questions): Improved over o1, though less dramatically than in math and programming

The next mathematical benchmark is particularly interesting.

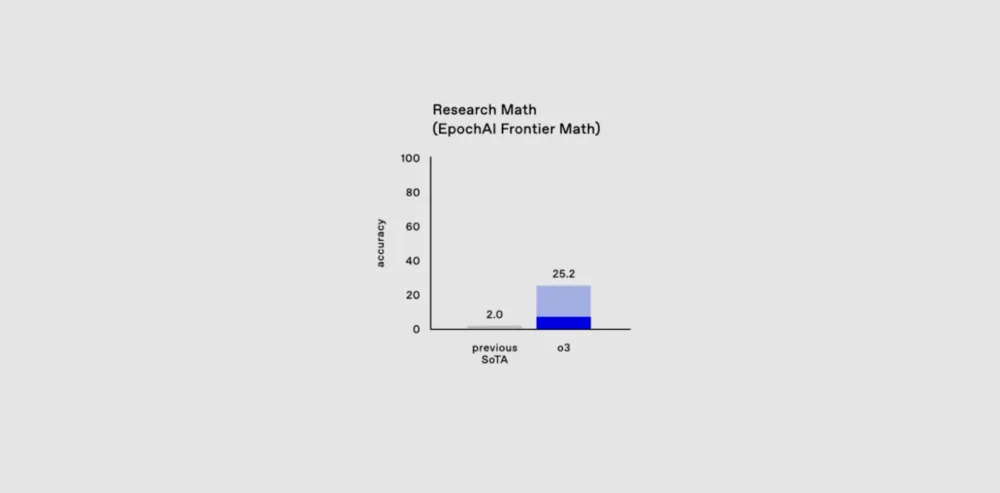

The FrontierMath Breakthrough

On FrontierMath, a benchmark developed by Epoch AI in collaboration with more than 60 top mathematicians, o3 solved 25.2% of the problems. That result is especially significant given that earlier models such as GPT-4 and Gemini 1.5 Pro could not even reach 2% on this benchmark.

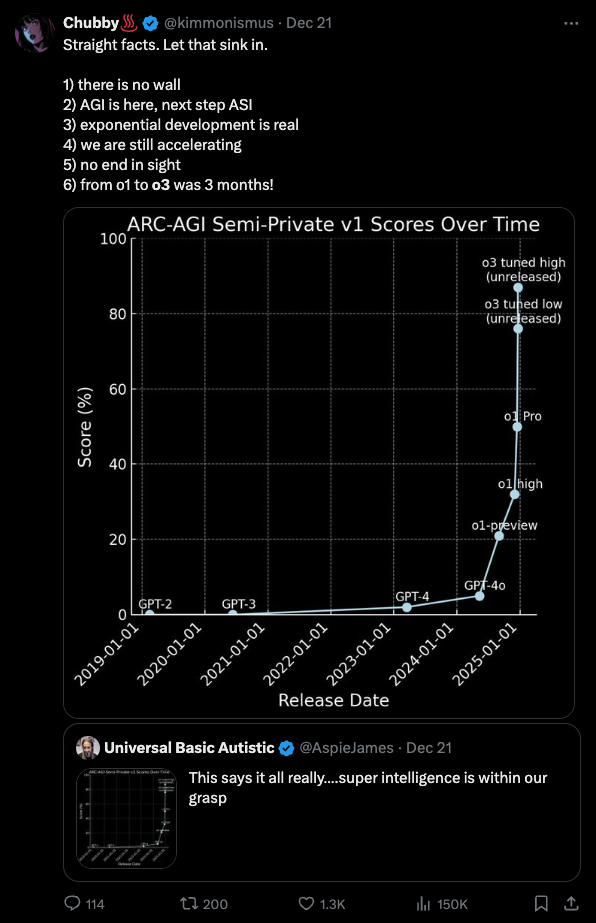

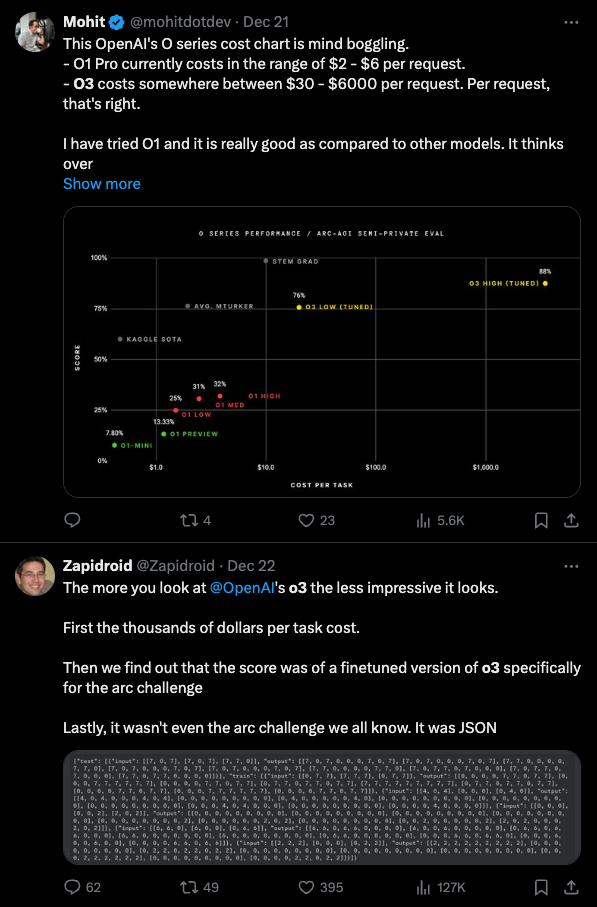

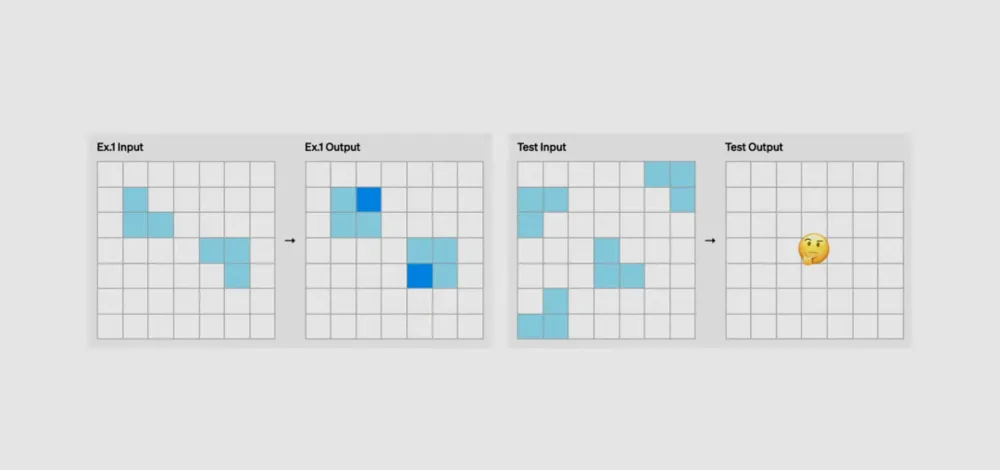

The ARC-AGI Achievement

Perhaps the most remarkable result is o3's performance on ARC-AGI, a benchmark created by François Chollet in 2019 to test the abstract reasoning of AI systems. Each task shows a few example pairs of input and output grids; the AI must identify the underlying pattern and apply it to a new input. Grids range from 1x1 to 30x30, and each cell takes one of ten possible colors.

The puzzles are highly abstract, and they have proven extremely difficult for AI systems.
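To make the task format concrete, here is a minimal Python sketch. The task is a hypothetical toy example, not one from the actual benchmark, and its layout (`train` and `test` lists of `input`/`output` grid pairs) loosely follows the JSON files of the public ARC dataset. A solver must find a rule consistent with every training pair and then apply it to the test input. Here the candidate rule, a left-right mirror named `mirror_horizontally`, is written by hand; discovering such rules unaided is exactly what makes ARC hard for AI.

```python
from typing import Any, Callable, Dict, List

# A grid is a list of rows; each cell is a color code from 0 to 9.
Grid = List[List[int]]

# Hypothetical toy task (not from the real ARC dataset), laid out like the
# public ARC JSON files: "train" and "test" lists of input/output grid pairs.
task: Dict[str, Any] = {
    "train": [
        {"input": [[1, 0, 0], [2, 3, 0]], "output": [[0, 0, 1], [0, 3, 2]]},
        {"input": [[5, 5], [0, 7]], "output": [[5, 5], [7, 0]]},
    ],
    "test": [{"input": [[4, 0, 9], [0, 6, 0]]}],
}


def is_valid_grid(grid: Grid) -> bool:
    """Check the ARC constraints: rectangular, 1x1 to 30x30, colors 0-9."""
    if not 1 <= len(grid) <= 30:
        return False
    width = len(grid[0])
    if not 1 <= width <= 30:
        return False
    return all(len(row) == width and all(0 <= c <= 9 for c in row) for row in grid)


def mirror_horizontally(grid: Grid) -> Grid:
    """Hypothetical candidate rule: reverse every row (left-right mirror)."""
    return [list(reversed(row)) for row in grid]


def fits_all_examples(rule: Callable[[Grid], Grid], task: Dict[str, Any]) -> bool:
    """A rule is accepted only if it reproduces every training output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


# Sanity-check the data, then apply the rule to the unseen test input.
assert all(is_valid_grid(p["input"]) and is_valid_grid(p["output"]) for p in task["train"])
if fits_all_examples(mirror_horizontally, task):
    prediction = mirror_horizontally(task["test"][0]["input"])
    print(prediction)  # [[9, 0, 4], [0, 6, 0]]
```

Real ARC tasks are far less obvious than a mirror: the rule may involve counting, symmetry, object movement, or several steps combined, and a system gets only a few examples to infer it.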

Here's the progression in scores:

- GPT-2 (2019): 0%

- GPT-3 (2020): 0%

- GPT-4 (2023): 2%

- GPT-4o (2024): 5%

- o1-preview (2024): 21%

- o1 (2024): 32%

- o1 Pro (2024): ~50%

- o3 (2024): 87.5% (high-compute configuration)

It took five years to climb from 0% to 5%, yet the jump from 5% to 87.5% took only about six months. For reference, the ARC Prize treats 85% as the threshold for human-level performance.
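The arithmetic behind that comparison is worth spelling out. This short Python sketch uses the article's own rough time spans (about five years, then about six months) and computes the average gain in percentage points per year for each period. Because the spans are approximations, read the result as an order-of-magnitude illustration rather than a precise measurement.

```python
def points_per_year(start: float, end: float, years: float) -> float:
    """Average gain in percentage points per year over a span."""
    return (end - start) / years


# Rough spans taken from the text above: 0% -> 5% in about five years,
# then 5% -> 87.5% in about six months (0.5 years).
early = points_per_year(0.0, 5.0, 5.0)
recent = points_per_year(5.0, 87.5, 0.5)

print(f"early: {early:.1f} pts/yr, recent: {recent:.1f} pts/yr, "
      f"speed-up: {recent / early:.0f}x")
# early: 1.0 pts/yr, recent: 165.0 pts/yr, speed-up: 165x
```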

The path to AGI truly stands unobstructed.

Powerful as o3 is, however, it is not yet available to the public.

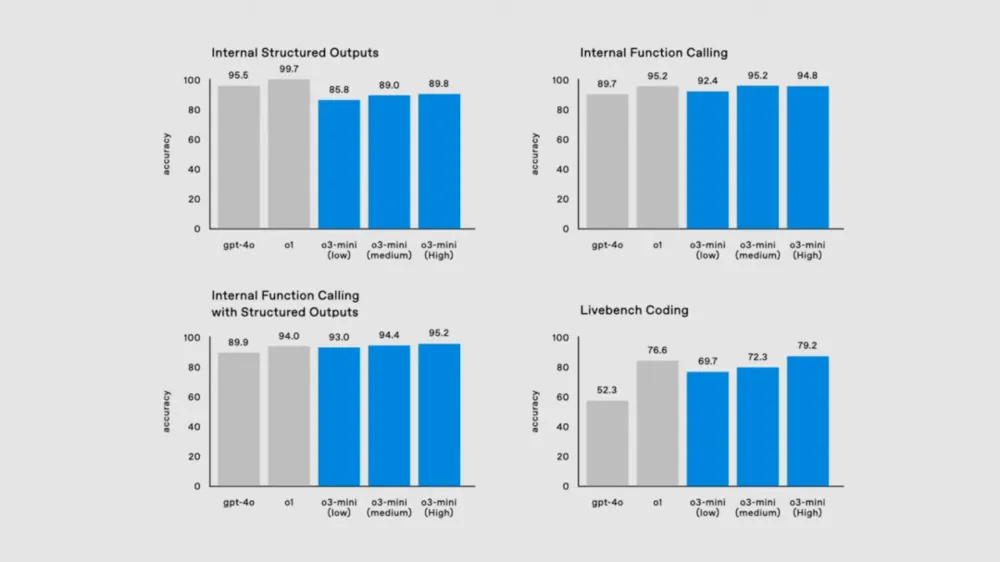

OpenAI also previewed o3-mini, a smaller version of o3 that can run at three reasoning-effort levels (low, medium, and high).

o3-mini is expected to become publicly available around the end of January.

I'm increasingly excited about where the AI industry is heading in 2025. Reasoning models, agents, AI hardware, and world models all promise to make it a far more exciting year than the transitional year of 2024.

2025 will truly be the year when AI reaches for the stars.